I’d like to share with you a new/old project I’m currently working on. Its current working title is MIDI VR. In short, it lets the user plug a MIDI keyboard into their computer and experience immersive 3D visualisations of the music as they play it.

MIDI VR is a unique project in terms of its history and meaning to me – I originally learned Unity by working on it, some years ago. However, I was using some very hacky techniques to get the MIDI data into Unity (for those curious, I was reading the MIDI data in Processing, converting it to OSC in real time, and receiving that OSC data in Unity). Worse, the libraries that I was using in Unity wouldn’t build, so the project was confined to the Unity editor.

I eventually abandoned the project, coming to the hard-to-swallow realisation that a project confined to my computer wasn’t really worth devoting too much time to. So I took all the code, parcelled it off to other projects (my favourite visualisation that I’d been working on went on to become GEOM), and put the project, which at the time was just called ‘keyboard’, to bed.

Fast forward a few years – I’m a significantly more experienced Unity programmer, very familiar with the DK2, and a lot more comfortable working with, and understanding, external libraries. I stumbled upon MIDI.NET, and realised that if it worked how I imagined it would, then I could return to my original project! I built a quick little test application, and…yes! I had MIDI data in a standalone .exe built from Unity. The project was back on!

History aside, I’d like to share the current state of the project. I’ve probably spent about 6 hours total on it thus far, so it’s very early days – I’m still in proof-of-concept mode.

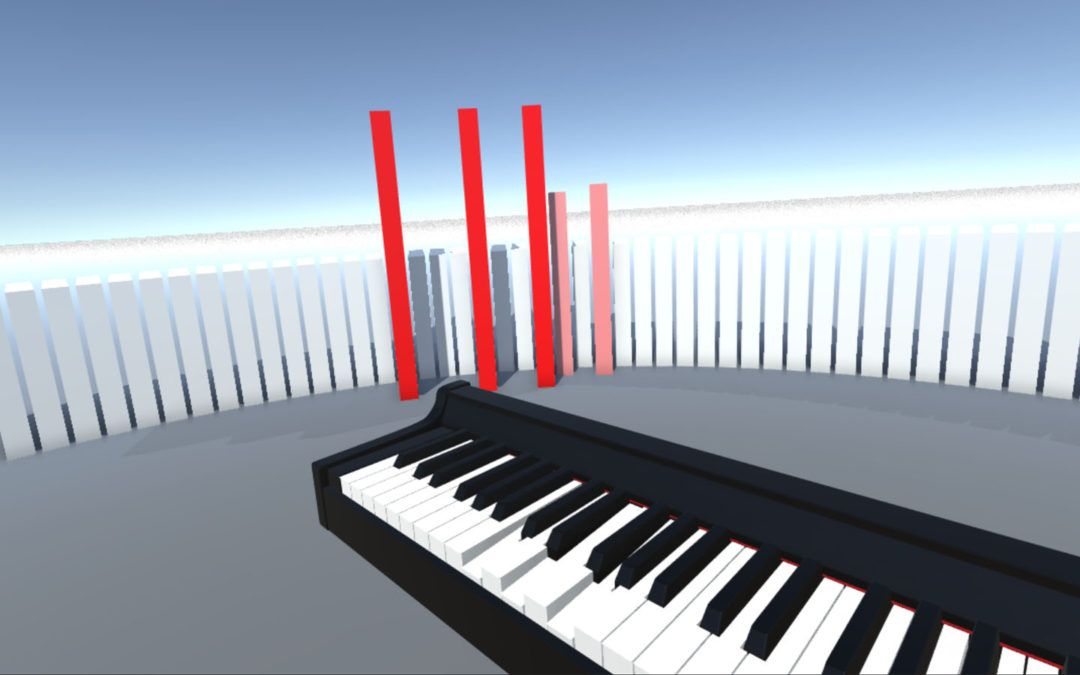

I currently have a dynamic piano keyboard with mostly final models of the keys – the user is able to put in the dimensions of their particular keyboard to ensure that the VR representation will match it. Playing keys will cause those keys to press down in VR.
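To give a rough idea of how a keyboard can be laid out from user-supplied dimensions, here is a minimal sketch. It is written in Python rather than Unity C# to keep it short, and it is not the project's actual code: the names `layout_keys`, `lowest_note`, `highest_note` and `keyboard_width` are made up for illustration, and it assumes that all white keys are equally wide and that the keyboard starts and ends on a white key.

```python
# Pitch classes (note number modulo 12) that are white keys: C D E F G A B.
WHITE_PITCH_CLASSES = {0, 2, 4, 5, 7, 9, 11}


def is_white(note):
    """Return True if the MIDI note number falls on a white key."""
    return note % 12 in WHITE_PITCH_CLASSES


def layout_keys(lowest_note, highest_note, keyboard_width):
    """Return (white_key_width, {note: x_centre}) for a keyboard.

    x is measured from the left edge of the keyboard, in the same unit
    as keyboard_width. Assumption: every white key has the same width.
    """
    notes = range(lowest_note, highest_note + 1)
    white_count = sum(1 for note in notes if is_white(note))
    if white_count == 0:
        raise ValueError("the note range contains no white keys")

    white_width = keyboard_width / white_count
    positions = {}
    white_index = 0  # number of white keys placed so far
    for note in notes:
        if is_white(note):
            # Centre of the next free white-key slot.
            positions[note] = (white_index + 0.5) * white_width
            white_index += 1
        else:
            # A black key is centred on the seam between its two
            # neighbouring white keys (a simplification of real pianos).
            positions[note] = white_index * white_width
    return white_width, positions
```

For a standard 88-key keyboard you would call something like `layout_keys(21, 108, 1.22)`, with the width measured in metres, and then place one key model at each returned position.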

I also re-used my old ParameterTracker class from the original keyboard project – it tracks the user’s playing and determines how fast, how loud and how wide (that is, how far apart the keys are) they are playing compared to their global average. It also determines the key they’re playing in. This is the data that’s useful for larger-scale, fuzzier visualisations.
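The general idea behind a tracker like this can be sketched as follows. This is a simplified Python illustration, not the real ParameterTracker: every method name here is hypothetical, only loudness is compared against the global average (speed and width are reported as raw values for the recent window), and the key estimate is a naive pitch-class count that only considers major keys.

```python
from collections import deque

MAJOR_SCALE = (0, 2, 4, 5, 7, 9, 11)  # semitone offsets from the tonic
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


class ParameterTracker:
    """Hypothetical sketch: compares recent playing with the whole session."""

    def __init__(self, window_seconds=2.0):
        self.window_seconds = window_seconds
        self.recent = deque()  # (time, pitch, velocity) inside the window
        self.total_notes = 0
        self.velocity_sum = 0
        self.pitch_class_counts = [0] * 12

    def note_on(self, time, pitch, velocity):
        """Record a note-on event; time is in seconds, velocity is 1-127."""
        self.recent.append((time, pitch, velocity))
        self.total_notes += 1
        self.velocity_sum += velocity
        self.pitch_class_counts[pitch % 12] += 1
        # Drop events that have fallen out of the sliding window.
        while self.recent and time - self.recent[0][0] > self.window_seconds:
            self.recent.popleft()

    def notes_per_second(self):
        """Speed: notes played per second over the recent window."""
        return len(self.recent) / self.window_seconds

    def width(self):
        """Width: semitones between the lowest and highest recent note."""
        if not self.recent:
            return 0
        pitches = [pitch for _, pitch, _ in self.recent]
        return max(pitches) - min(pitches)

    def loudness_ratio(self):
        """Recent average velocity divided by the global average velocity."""
        if not self.recent or self.velocity_sum == 0:
            return 1.0
        recent_avg = sum(v for _, _, v in self.recent) / len(self.recent)
        global_avg = self.velocity_sum / self.total_notes
        return recent_avg / global_avg

    def estimate_key(self):
        """Pick the major key whose scale covers the most notes played."""
        def score(tonic):
            return sum(self.pitch_class_counts[(tonic + step) % 12]
                       for step in MAJOR_SCALE)
        return NOTE_NAMES[max(range(12), key=score)] + " major"
```

A `loudness_ratio()` above 1 means the user is currently playing louder than their average for the session, which is the kind of relative value that suits a fuzzy, large-scale visualisation better than a raw velocity does.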

As a quick little test of moving data around the program, I built a semicircle of cubes, each corresponding to a MIDI pitch. Their scale and colour change when the note they are assigned to is played.
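The geometry of a test like this is simple enough to sketch. The snippet below is an assumed Python illustration rather than the Unity code: `semicircle_positions` and `cube_response` are hypothetical names, the radius and the velocity-to-scale mapping are arbitrary choices, and the hue-per-pitch-class colouring is just one plausible scheme.

```python
import colorsys
import math


def semicircle_positions(count, radius):
    """Return (x, y, z) positions for `count` cubes spread over a semicircle.

    The arc runs from the viewer's left (-radius, 0, 0) round the front
    to the right (+radius, 0, 0), with the viewer at the origin.
    """
    if count < 2:
        raise ValueError("need at least two cubes to span a semicircle")
    positions = []
    for i in range(count):
        angle = math.pi * (1 - i / (count - 1))  # pi (left) down to 0 (right)
        positions.append((radius * math.cos(angle), 0.0, radius * math.sin(angle)))
    return positions


def cube_response(pitch, velocity):
    """Map a note-on event to a (scale, (r, g, b)) pair for its cube.

    Assumed mapping: harder key presses give bigger, brighter cubes, and
    the hue depends on the pitch class so octaves share a colour.
    """
    strength = velocity / 127          # MIDI velocity normalised to 0..1
    scale = 1.0 + strength             # 1.0 at rest, up to 2.0 at full velocity
    hue = (pitch % 12) / 12
    colour = colorsys.hsv_to_rgb(hue, 1.0, strength)
    return scale, colour
```

With one cube per MIDI pitch you would generate 128 positions once at start-up, then call something like `cube_response` on each note-on to update the matching cube.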

So everything is working very well, and the next step is to get some real visualisations happening! I don’t have nearly as much time to devote to personal projects these days as I did back when I first learned Unity, so I’m probably going to focus on quick little visualisations for now, each exploring a different idea. I picture the final version of this program with at least 10 different visualisations in it that players can enjoy.

One final obstacle – my Rift cable isn’t long enough for me to sit at my piano while wearing the Rift, so I can’t test it for real, only lean over and mash some of the low notes. I have a short HDMI extension cable coming in the mail – I’ve heard of people having mixed success extending the DK2 cable, but my options are that or moving my piano to a somewhat awkward position perpendicular to my desk, which I’m not keen on. So wish me luck!